Deep Learning Specialization:

Master Deep Learning, and Break into AI

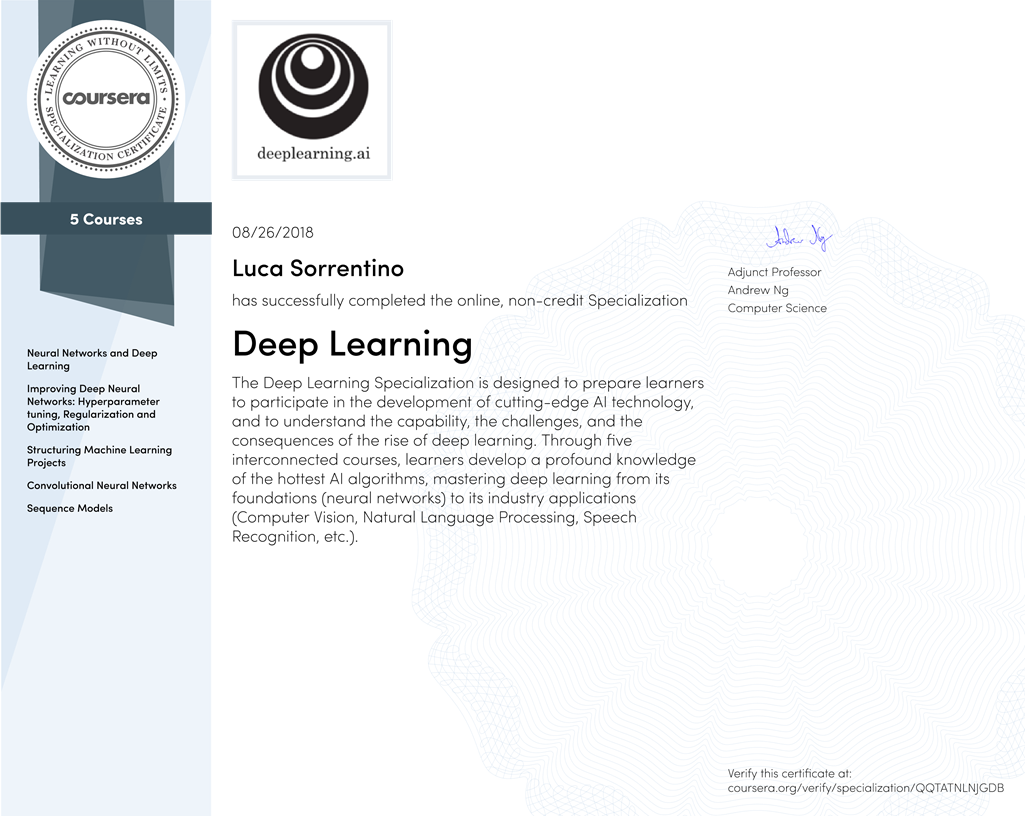

Completed in 2018. Tags: machine learning, coursera, deep learning, tensorflow, neural network

This is the second part of the Go Deep In Deep Learning series (PT2: Deep Learning Specialization). As announced at the end of the previous article, after completing Andrew Ng's introductory course, Machine Learning, I started his Deep Learning Specialization on Coursera. The specialization is structured in 5 courses:

- Neural Networks and Deep Learning: basically, you will learn how to build your first deep neural network using numpy;

- Improving Deep Neural Networks: basically, you will learn how to tune your deep neural network;

- Structuring Machine Learning Projects: basically, you will gain experience with realistic scenarios without having to spend years working on machine learning projects;

- Convolutional Neural Networks: basically, computer vision;

- Sequence Models: basically, speech recognition and NLP.

After a short review of each course, the article closes with two more sections:

- The coolest Programming assignments: basically, what can you do now?

- What's next?

Neural Networks and Deep Learning

- Understand the major technology trends driving Deep Learning

- Be able to build, train and apply fully connected deep neural networks

- Know how to implement efficient (vectorized) neural networks

- Understand the key parameters in a neural network's architecture

My opinion:

I recommend this course to those who already have a theoretical idea of how a deep neural network works and want to deepen the practical side: you will implement a deep neural network in numpy, without a framework, which is very good for beginners. If you are starting from scratch and want a good understanding of the subject, I suggest taking the introductory course first, again by Prof. Andrew Ng, called Machine Learning.
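To make "vectorized" concrete, here is a minimal numpy sketch of forward propagation through a fully connected network. It is not the assignment's code: it assumes ReLU hidden layers, a single sigmoid output unit (binary classification) and small random initialization, and `init_params` and `forward` are hypothetical names. Each column of `X` is one example, so a whole batch goes through a layer in one matrix product instead of a Python loop over examples.

```python
import numpy as np

def relu(z):
    # Element-wise ReLU for the hidden layers
    return np.maximum(0, z)

def sigmoid(z):
    # Element-wise logistic function for the binary output layer
    return 1.0 / (1.0 + np.exp(-z))

def init_params(layer_dims, seed=0):
    # Hypothetical helper: layer_dims = [n_x, n_h1, ..., n_y]
    # Small random weights break symmetry; biases start at zero
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(layer_dims)):
        params[f"W{l}"] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * 0.01
        params[f"b{l}"] = np.zeros((layer_dims[l], 1))
    return params

def forward(X, params):
    # X has shape (n_x, m): one column per example
    L = len(params) // 2
    A = X
    for l in range(1, L):
        # One matrix product handles all m examples; b broadcasts over the columns
        A = relu(params[f"W{l}"] @ A + params[f"b{l}"])
    return sigmoid(params[f"W{L}"] @ A + params[f"b{L}"])
```

For example, `forward(np.ones((4, 5)), init_params([4, 3, 1]))` returns a `(1, 5)` array of probabilities, one per example.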

Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

- Understand industry best-practices for building deep learning applications.

- Be able to effectively use the common neural network "tricks", including initialization, L2 and dropout regularization, Batch normalization, and gradient checking.

- Be able to implement and apply a variety of optimization algorithms, such as mini-batch gradient descent, Momentum, RMSprop and Adam, and check for their convergence.

- Understand new best practices for the deep learning era on how to set up train/dev/test sets and analyze bias/variance

- Be able to implement a neural network in TensorFlow.

My opinion:

This second course is the natural continuation of the previous one. In the first course, you learned how to build a deep neural network from scratch; now you learn how to initialize parameters better, how to speed up training with optimization algorithms, how to tune the hyperparameters, and you practice with TensorFlow. I found this course a bit more difficult than the first one, but I think these concepts are fundamental and the first course alone is not enough.
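Adam combines two of the optimizers listed above: a Momentum-like moving average of the gradients and an RMSprop-like moving average of their squares, plus a bias correction for the first steps. Below is a minimal numpy sketch of one update for a single parameter array, using the commonly cited defaults (beta1 = 0.9, beta2 = 0.999, eps = 1e-8). `adam_step` is a hypothetical name, and the toy quadratic objective is only for illustration.

```python
import numpy as np

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    # Momentum part: exponentially weighted average of the gradients
    m = beta1 * m + (1 - beta1) * grad
    # RMSprop part: exponentially weighted average of the squared gradients
    v = beta2 * v + (1 - beta2) * grad ** 2
    # Bias correction: m and v start at zero, so early averages are too small
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # Per-parameter step, scaled down where gradients have been large
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v

# Toy usage: minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w, m, v = np.zeros(1), np.zeros(1), np.zeros(1)
for t in range(1, 3001):  # t starts at 1 so the bias correction is defined
    w, m, v = adam_step(w, 2 * (w - 3), m, v, t, lr=0.01)
print(w)  # should end up close to 3
```

In a real network the same update is applied to every `W` and `b`, each with its own `m` and `v`.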

Structuring Machine Learning Projects

After 2 weeks, you will:

- Understand how to diagnose errors in a machine learning system

- Be able to prioritize the most promising directions for reducing error

- Understand complex ML settings, such as mismatched training/test sets, and comparing to and/or surpassing human-level performance

- Know how to apply end-to-end learning, transfer learning, and multi-task learning

My opinion:

This is the most important of the 5 courses, because its contents are almost exclusive to this course. It passes on the best advice learned from years of experience on ML projects and suggests the best strategies for real-world problems.
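A good example of this kind of advice is the bias/variance diagnosis against human-level performance: human-level error is used as a proxy for the best achievable error, the "avoidable bias" is training error minus human-level error, the variance is dev error minus training error, and you work on the larger gap first. A tiny sketch of that rule, with a hypothetical `diagnose` function and made-up error rates:

```python
def diagnose(human_err, train_err, dev_err):
    """Suggest what to work on first, given error rates as fractions."""
    # Human-level error stands in for the best achievable (Bayes) error
    avoidable_bias = train_err - human_err
    # How much worse the model does on data it was not trained on
    variance = dev_err - train_err
    if avoidable_bias >= variance:
        return "bias"      # e.g. bigger network, train longer, better optimizer
    return "variance"      # e.g. more data, regularization


# Same training/dev errors, different human-level baselines
print(diagnose(0.01, 0.08, 0.10))   # bias: avoidable bias 7% > variance 2%
print(diagnose(0.075, 0.08, 0.10))  # variance: avoidable bias 0.5% < variance 2%
```

The point of the example is that identical training and dev errors can call for opposite actions depending on how well humans do on the task.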

Convolutional Neural Networks

- Understand how to build a convolutional neural network, including recent variations such as residual networks.

- Know how to apply convolutional networks to visual detection and recognition tasks.

- Know to use neural style transfer to generate art.

- Be able to apply these algorithms to a variety of image, video, and other 2D or 3D data

My opinion:

This is the most difficult of the 5 courses, but also the most satisfying. Scientists working on computer vision have had incredible ideas and have achieved impressive results. Unfortunately, this course is not enough to master these techniques, but it is certainly an excellent first step into this new world.
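The building block of all these networks is the convolution (strictly speaking a cross-correlation, since the filter is not flipped, which is the usual deep learning convention). Below is a naive numpy sketch for a single-channel image and a single square filter, with zero padding `pad` and stride `stride`; the output size along each axis is floor((n + 2p - f) / s) + 1. Real convolutional layers also sum over input channels, add a bias and apply many filters, and frameworks use much faster implementations; `conv2d` is a hypothetical name.

```python
import numpy as np

def conv2d(image, kernel, stride=1, pad=0):
    # Zero-pad the borders (np.pad defaults to constant zeros)
    x = np.pad(image, pad)
    f = kernel.shape[0]  # assumes a square f x f filter
    # Output size along each axis: floor((n + 2p - f) / s) + 1
    out_h = (x.shape[0] - f) // stride + 1
    out_w = (x.shape[1] - f) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            # Element-wise product with the window, then sum (no filter flip)
            out[i, j] = np.sum(x[r:r + f, c:c + f] * kernel)
    return out

# Vertical edge detector on a 6x6 image: bright left half, dark right half
image = np.array([[10.0] * 3 + [0.0] * 3] * 6)
kernel = np.array([[1.0, 0.0, -1.0]] * 3)
print(conv2d(image, kernel))  # 4x4; the edge shows up in the two middle columns
```

In a CNN the filter values are not hand-designed like this edge detector but learned by backpropagation.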

Sequence Models

- Understand how to build and train Recurrent Neural Networks (RNNs), and commonly-used variants such as GRUs and LSTMs.

- Be able to apply sequence models to natural language problems, including text synthesis.

- Be able to apply sequence models to audio applications, including speech recognition and music synthesis.

My opinion:

This is the course I liked least. I do not feel very comfortable using neural networks for tasks where logical reasoning would seem to be needed (such as understanding text), but I recognize that this is the state of the art, because it works better. Here too, the course provides some basic tools to find your way in the world of NLP, but it is not nearly enough to claim mastery of it.
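GRUs and LSTMs are refinements of the basic recurrent cell, so it helps to see that cell first. In this minimal numpy sketch (a plain RNN, not a GRU or LSTM), each time step computes a new hidden state from the current input and the previous state, and the same weights are reused at every step. The function names and the weight names (`Wax` input-to-hidden, `Waa` hidden-to-hidden, `Wya` hidden-to-output) are illustrative assumptions.

```python
import numpy as np

def softmax(z):
    # Column-wise softmax, shifted by the max for numerical stability
    e = np.exp(z - z.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def rnn_cell_forward(x_t, a_prev, Wax, Waa, Wya, ba, by):
    # New hidden state mixes the current input with the previous state
    a_next = np.tanh(Wax @ x_t + Waa @ a_prev + ba)
    # Prediction for this time step (e.g. a distribution over characters)
    y_t = softmax(Wya @ a_next + by)
    return a_next, y_t

def rnn_forward(xs, a0, params):
    # params = (Wax, Waa, Wya, ba, by), shared across all time steps
    a, ys = a0, []
    for x_t in xs:
        a, y_t = rnn_cell_forward(x_t, a, *params)
        ys.append(y_t)
    return a, ys
```

With `n_a` hidden units and a batch of `m` sequences, each `x_t` has shape `(n_x, m)` and the hidden state has shape `(n_a, m)`.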

The coolest Programming assignments

Using the SIGNS dataset, I built a deep neural network that recognizes the numbers from 0 to 5 in sign language with impressive accuracy.

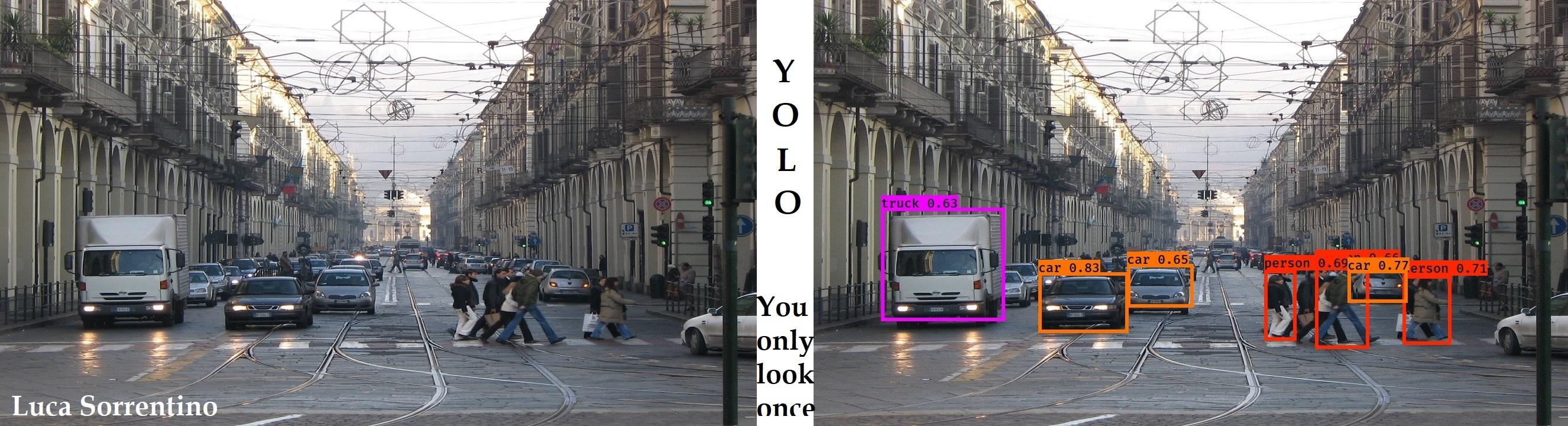

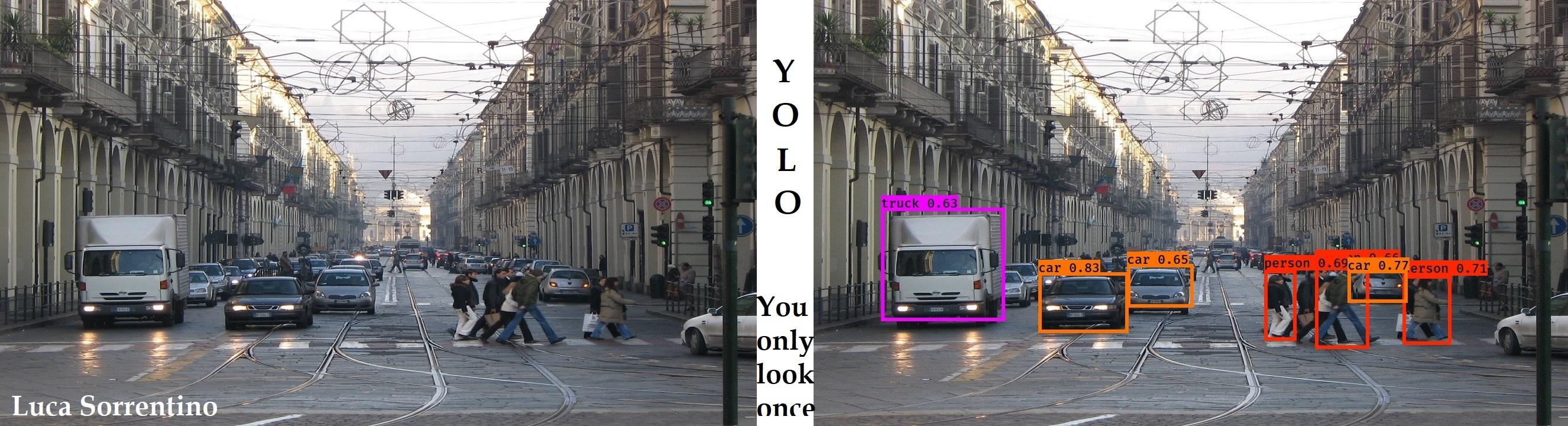

I applied the YOLO algorithm (a state-of-the-art object detection model) to scenes from Turin.

A live version of the YOLO algorithm using a Raspberry Pi camera.
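YOLO predicts many overlapping boxes for the same object, and a standard clean-up step is non-max suppression based on intersection over union (IoU). Here is a minimal pure-Python sketch for a single class, assuming boxes given as `(x1, y1, x2, y2)` corner coordinates and a 0.5 threshold; it is not the code from the assignment or from the Raspberry Pi experiment, and the function names are hypothetical.

```python
def iou(box1, box2):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    # Corners of the intersection rectangle
    xi1, yi1 = max(box1[0], box2[0]), max(box1[1], box2[1])
    xi2, yi2 = min(box1[2], box2[2]), min(box1[3], box2[3])
    # Clamp at zero so disjoint boxes have no intersection
    inter = max(0, xi2 - xi1) * max(0, yi2 - yi1)
    area1 = (box1[2] - box1[0]) * (box1[3] - box1[1])
    area2 = (box2[2] - box2[0]) * (box2[3] - box2[1])
    return inter / (area1 + area2 - inter)

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept, most confident first."""
    # Visit boxes from most to least confident
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with one already kept
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep

boxes = [(0, 0, 2, 2), (0.1, 0.1, 2, 2), (5, 5, 6, 6)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]: the second box duplicates the first
```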

Experiments with style transfer (the technique of recomposing images in the style of other images).
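In neural style transfer, "style" is usually captured by the Gram matrix of a layer's activations (the correlations between its channels), and the style cost compares the Gram matrices of the style image and of the generated image. A minimal numpy sketch for a single layer, assuming activations of shape `(n_H, n_W, n_C)` have already been extracted from a pretrained network (not shown). The normalization constant follows Gatys et al.'s formulation and should be treated as an assumption; the function names are hypothetical.

```python
import numpy as np

def gram_matrix(a):
    # a: activations of one layer, shape (n_H, n_W, n_C)
    n_H, n_W, n_C = a.shape
    # Unroll to (n_C, n_H * n_W): one row per channel
    a_unrolled = a.reshape(n_H * n_W, n_C).T
    # Entry (i, j) measures how strongly channels i and j fire together
    return a_unrolled @ a_unrolled.T

def layer_style_cost(a_style, a_generated):
    n_H, n_W, n_C = a_style.shape
    diff = gram_matrix(a_style) - gram_matrix(a_generated)
    # Squared difference of Gram matrices, normalized by layer size
    return np.sum(diff ** 2) / (4 * n_C ** 2 * (n_H * n_W) ** 2)
```

The full style cost is typically a weighted sum of this term over several layers, combined with a content cost that keeps the generated image close to the original photo.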

What's next?

Now that I have finished all 5 courses, I have perfect mastery of deep learning and do not need to study anymore... obviously, I'm joking. I'm still at the beginning: now it's time to do exercises and start concrete projects that apply what I have learned.
